Memory Hygiene With TensorFlow During Model Training and Deployment for Inference | by Tanveer Khan | IBM Data Science in Practice | Medium

Allocator (GPU_0_bfc) ran out of memory trying to allocate 17.49MiB with freed_by_count=0. – jentsch.io

python - OoM: Out of Memory Error during hyper parameter optimization with Talos on a tensorflow model - Stack Overflow

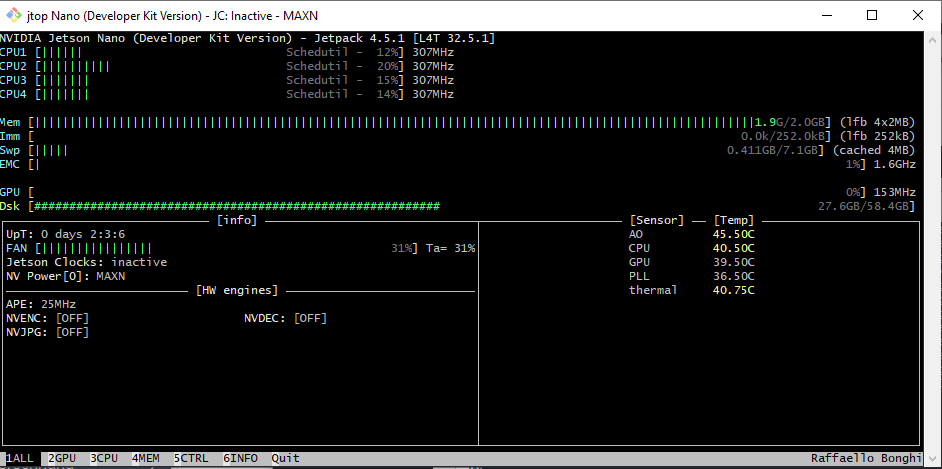

![Resource exhausted: OOM when allocating tensor with shape[256] - Jetson Nano - NVIDIA Developer Forums](https://global.discourse-cdn.com/nvidia/optimized/3X/e/d/ed0e2da927da7415b723cba551722119e6ce1842_2_690x298.png)

Resource exhausted: OOM when allocating tensor with shape[256] - Jetson Nano - NVIDIA Developer Forums

Allocator (GPU_0_bfc) ran out of memory trying to allocate 2.39GiB with freed_by_count=0. · Issue #1303 · tensorpack/tensorpack · GitHub

Problem In tensorflow-gpu with error "Allocator (GPU_0_bfc) ran out of memory trying to allocate 2.20GiB with freed_by_count=0." · Issue #43546 · tensorflow/tensorflow · GitHub

Allocator (GPU_0_bfc) ran out of memory · Issue #12 · aws-deepracer-community/deepracer-for-cloud · GitHub

Training produces Out Of Memory error with TF 2.* but works with TF 1.14 · Issue #39574 · tensorflow/tensorflow · GitHub