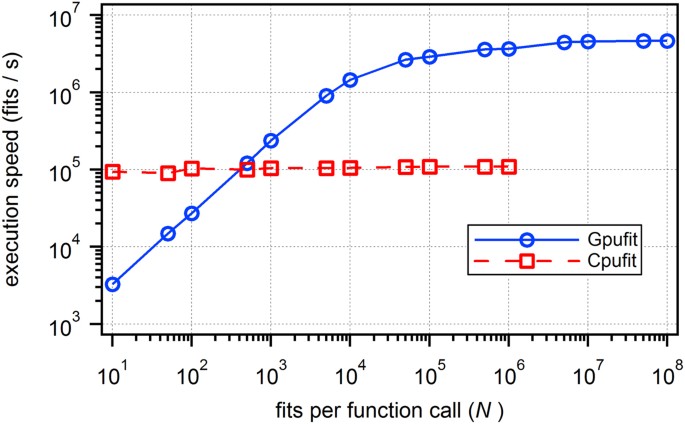

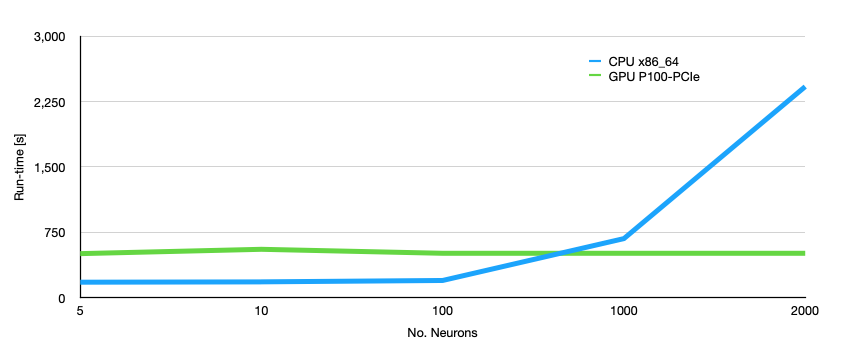

Comparison of HYDRA performance on single CPU and GPU. The starting...

1D FFT performance test comparing MKL (CPU), CUDA (GPU) and OpenCL (GPU).

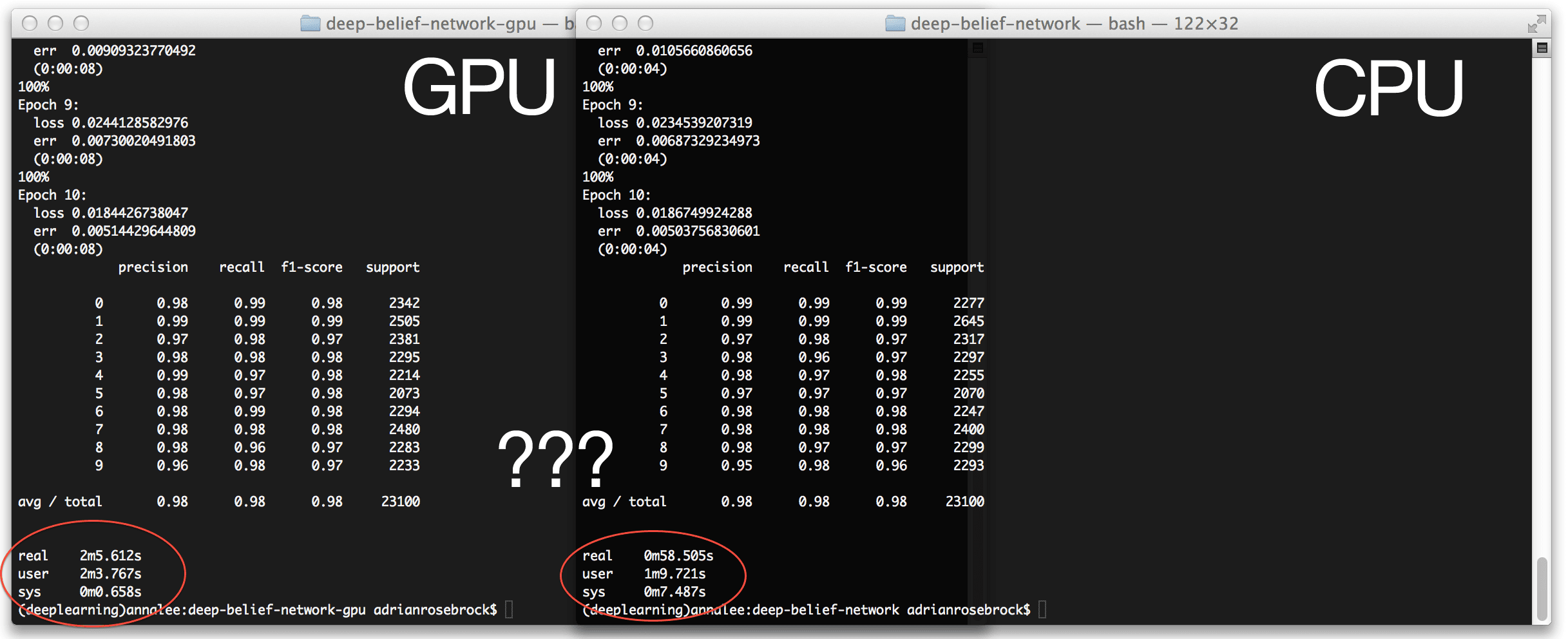

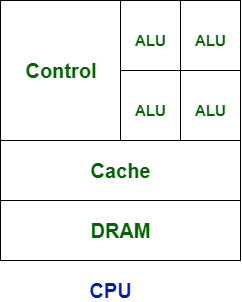

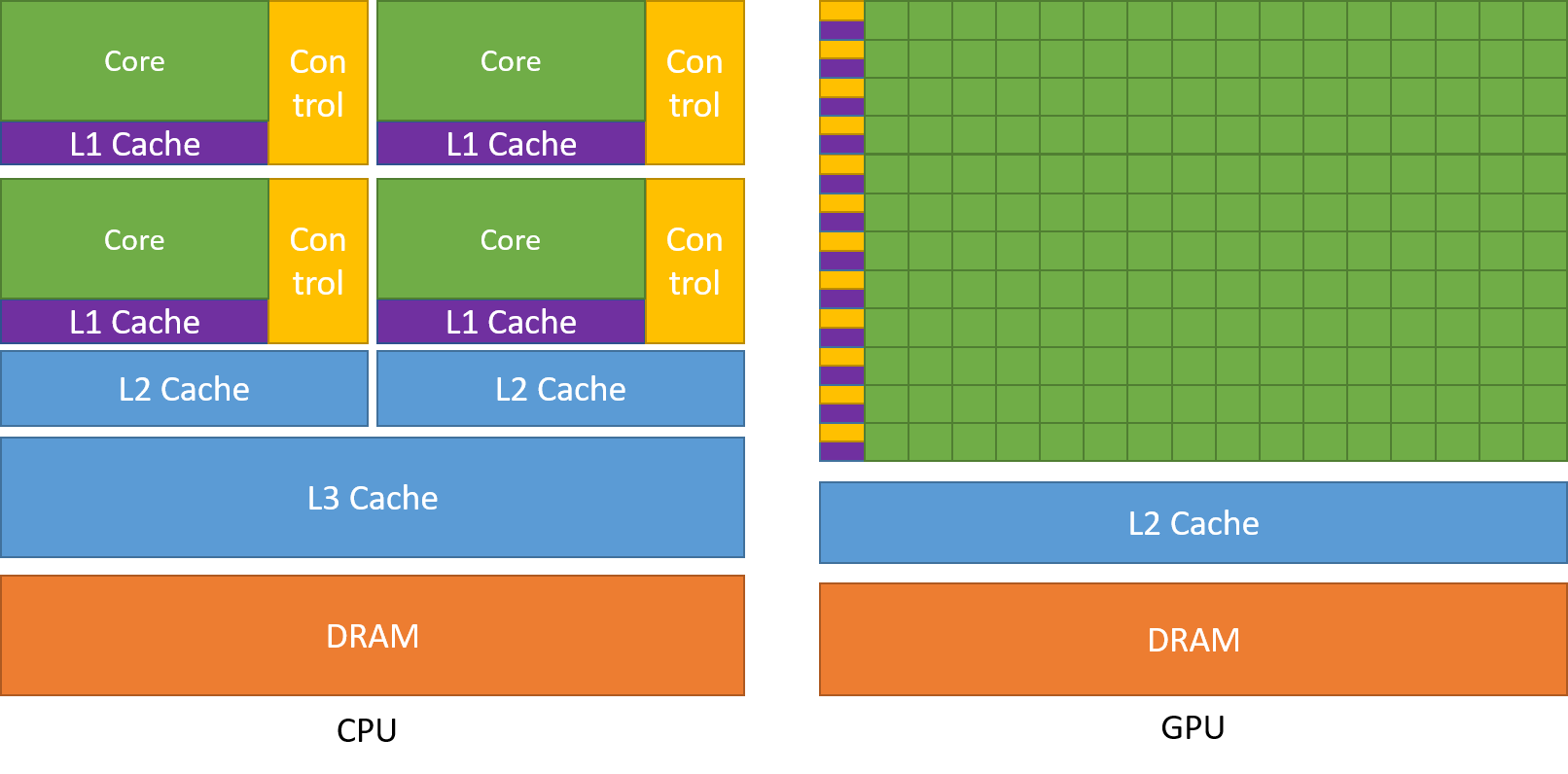

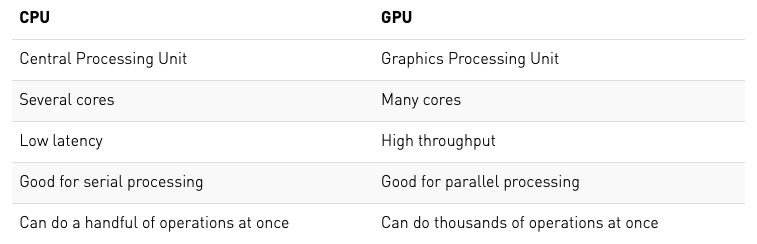

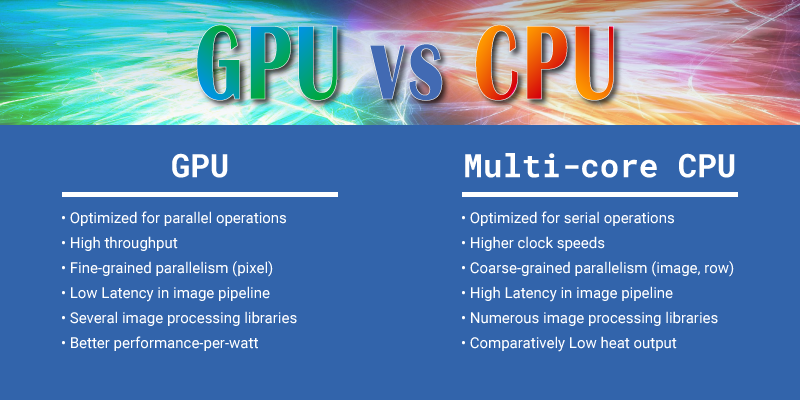

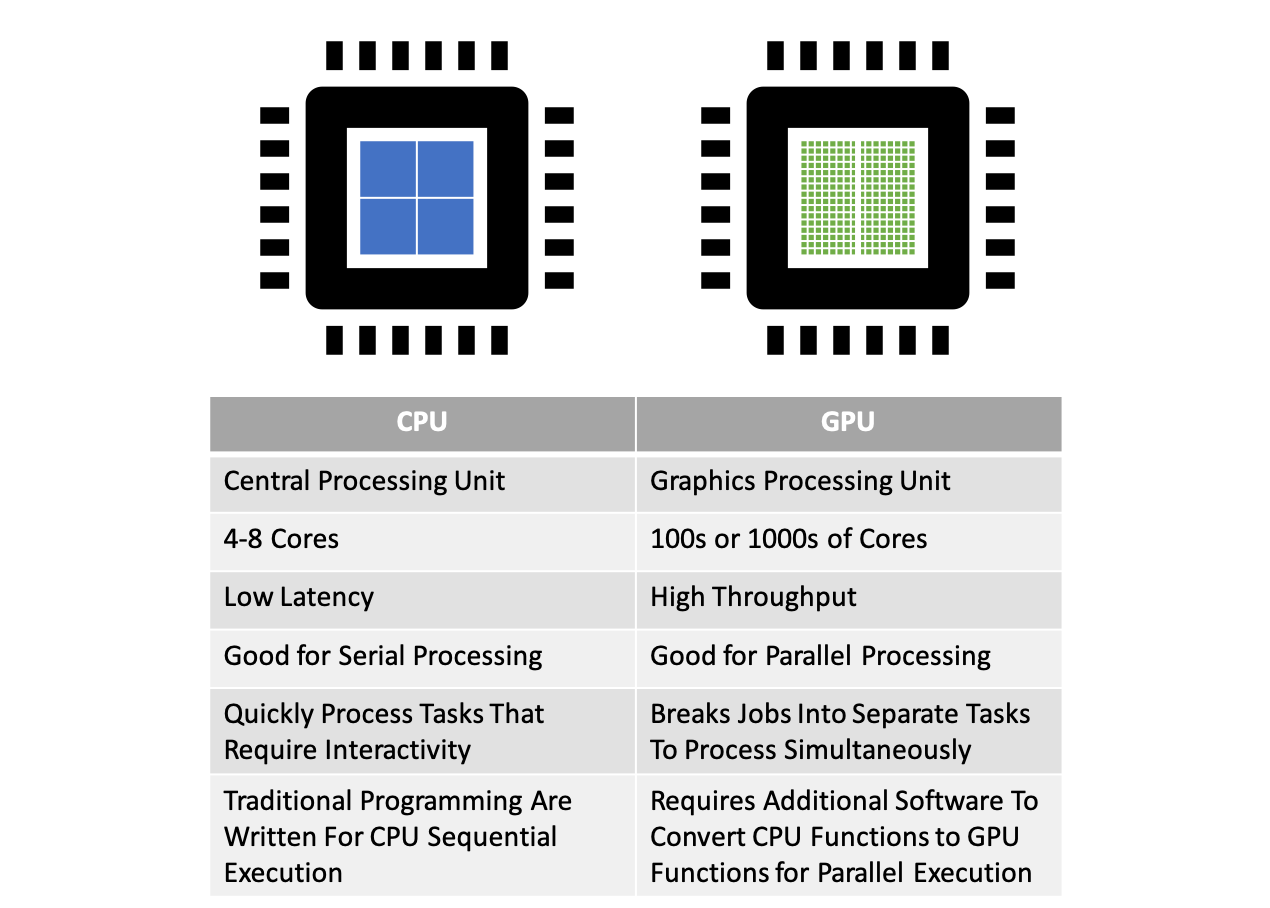

Parallel Computing — Upgrade Your Data Science with GPU Computing | by Kevin C Lee | Towards Data Science

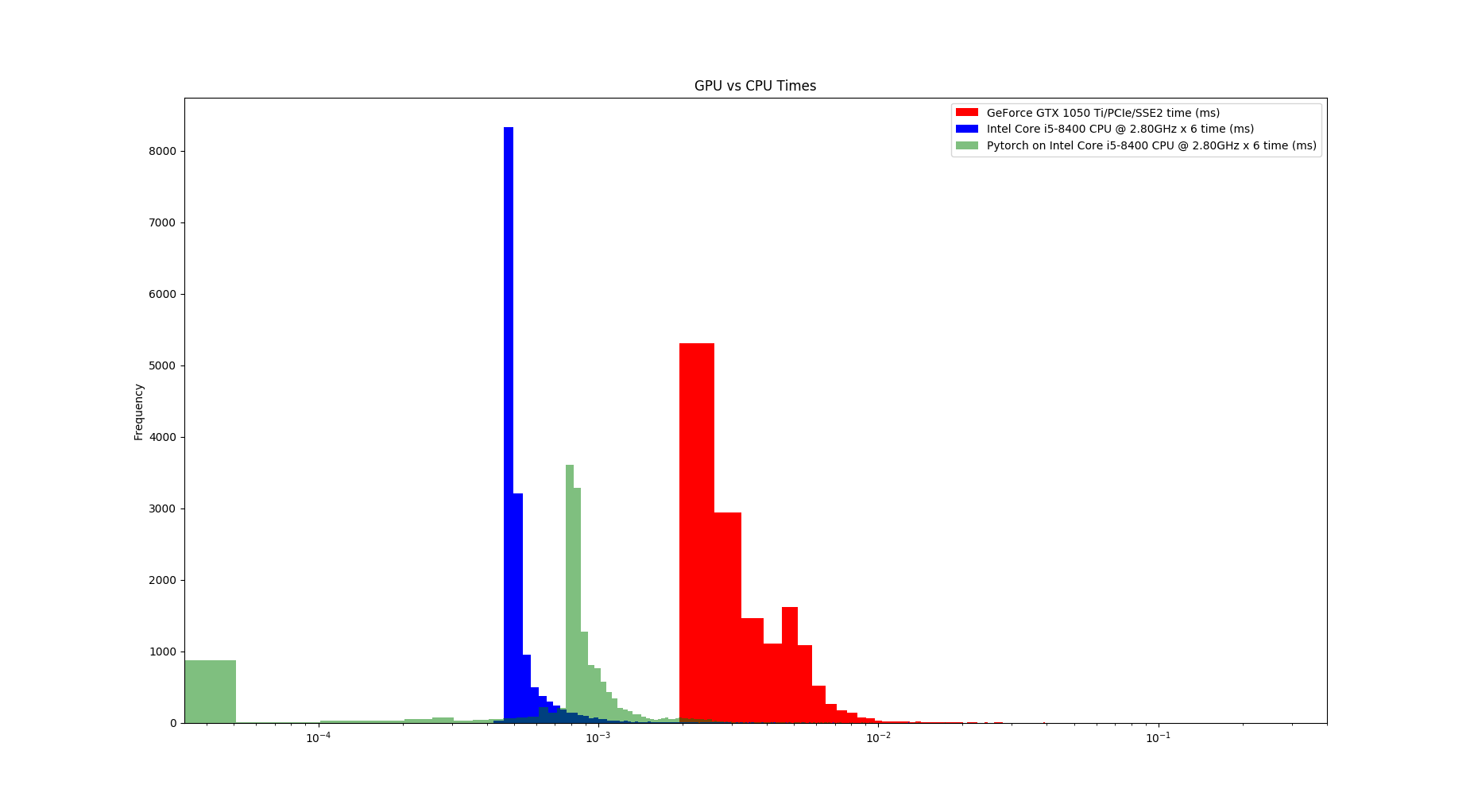

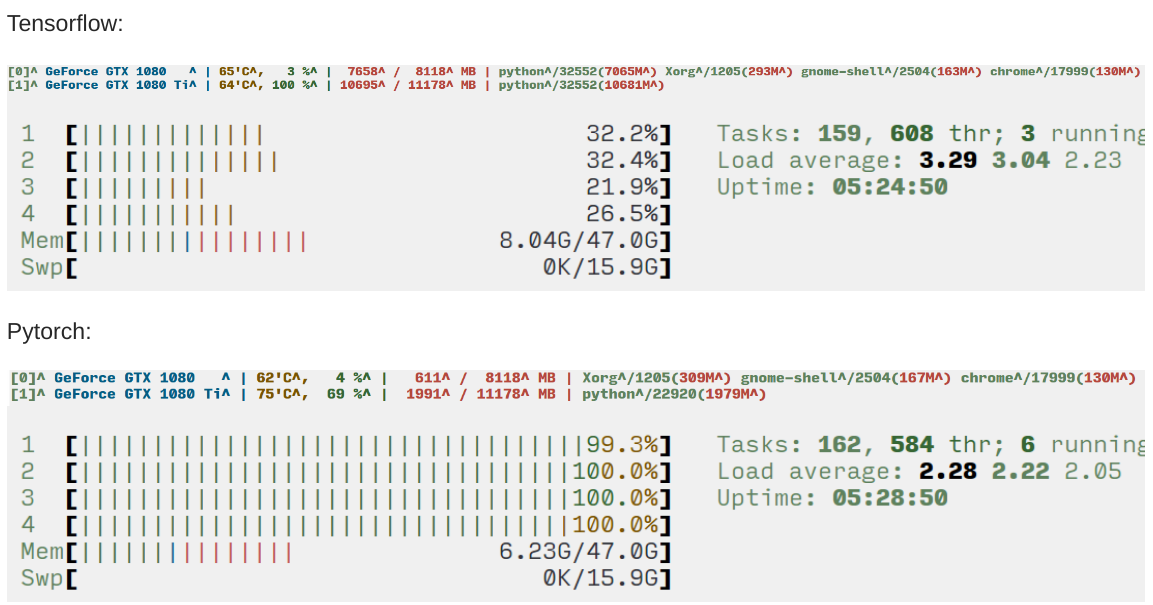

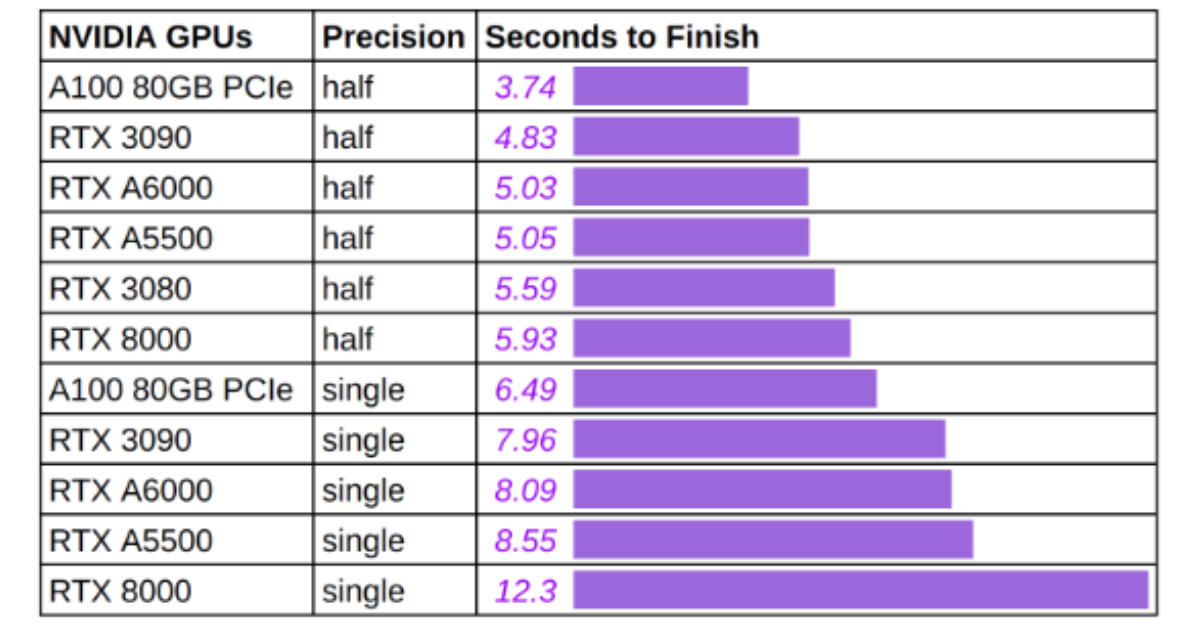

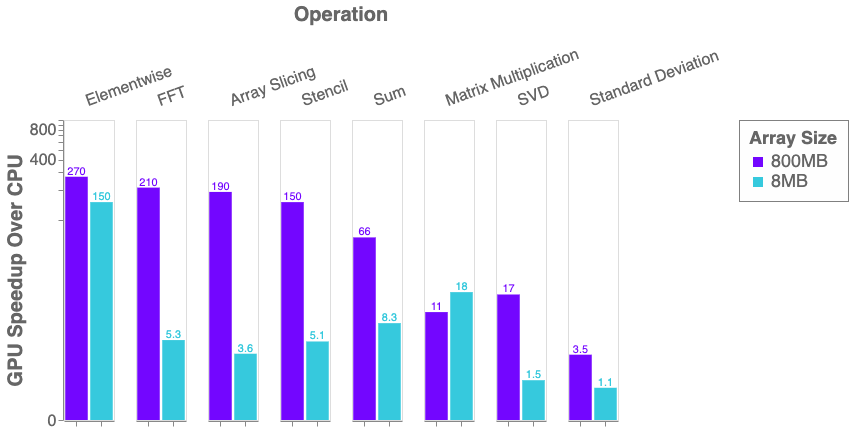

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science