What are the downsides of using TPUs instead of GPUs when performing neural network training or inference? - Data Science Stack Exchange

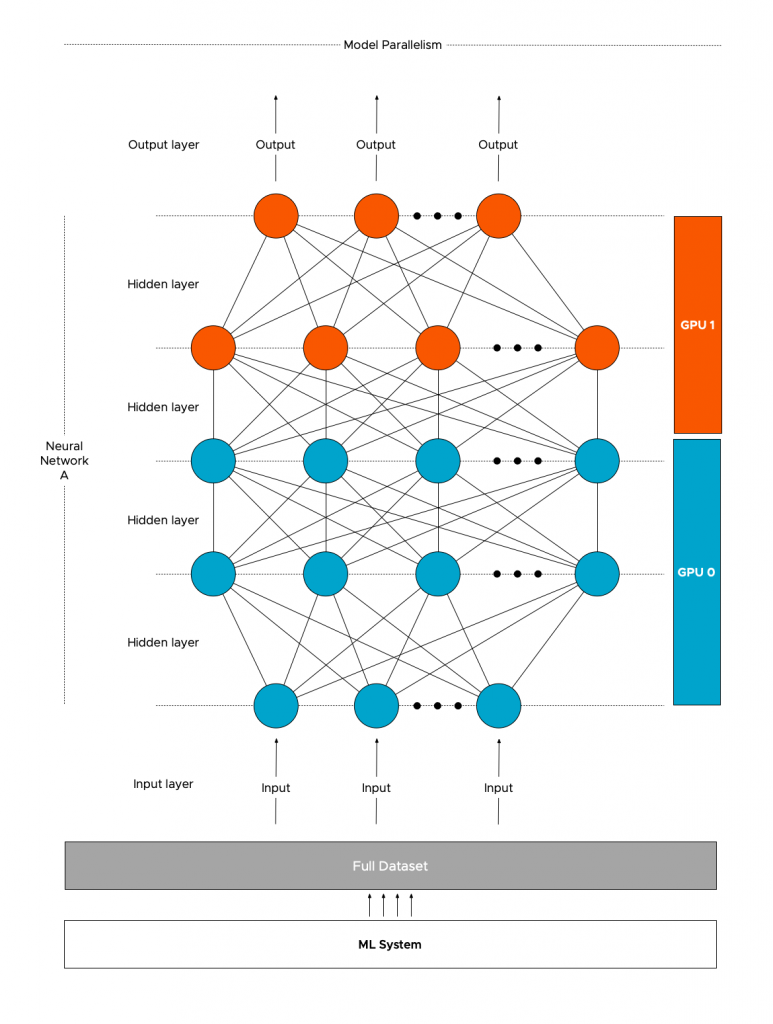

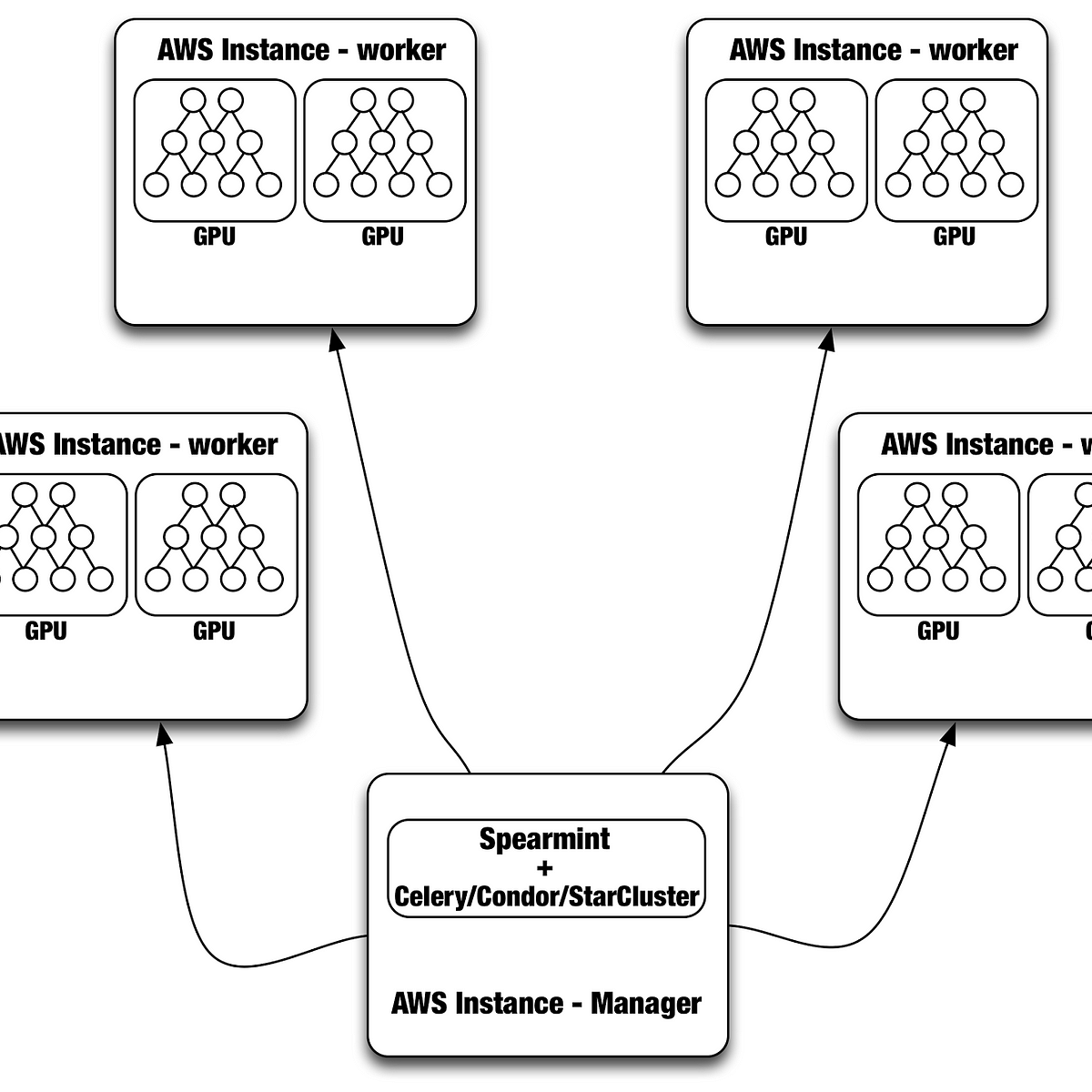
