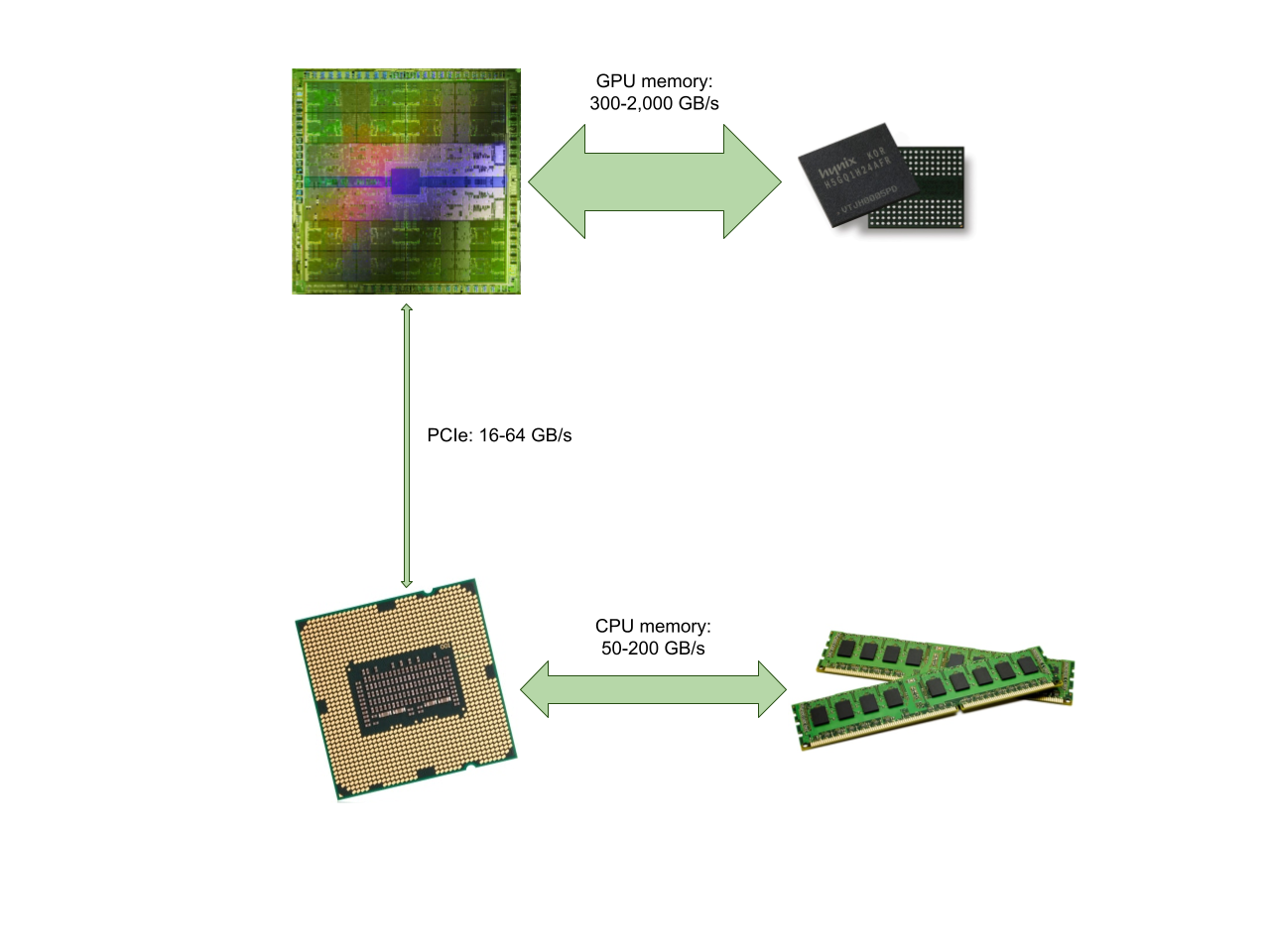

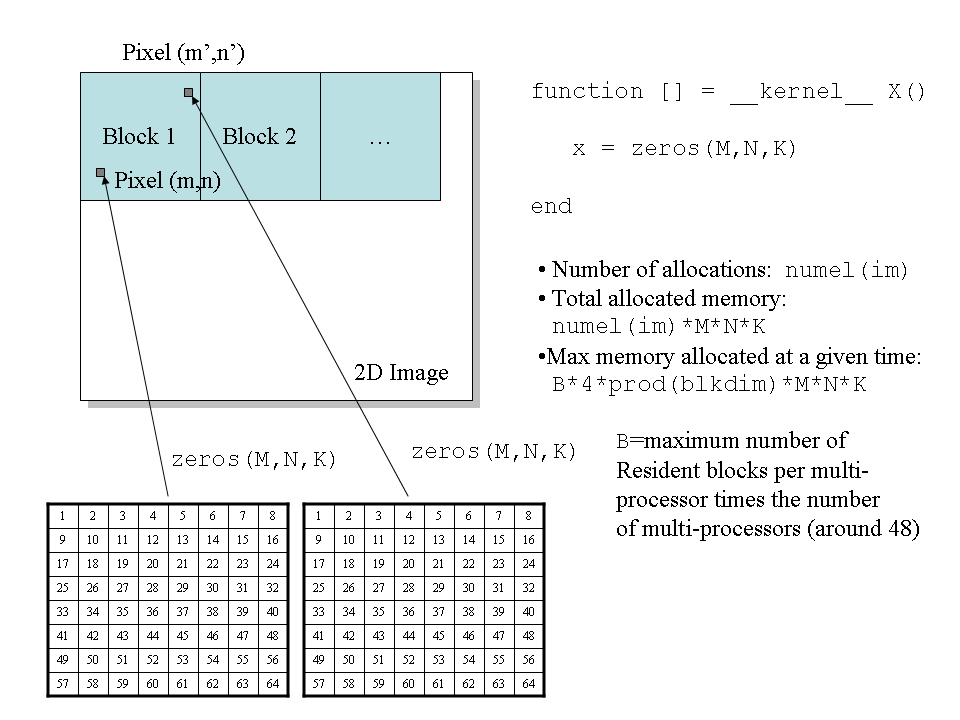

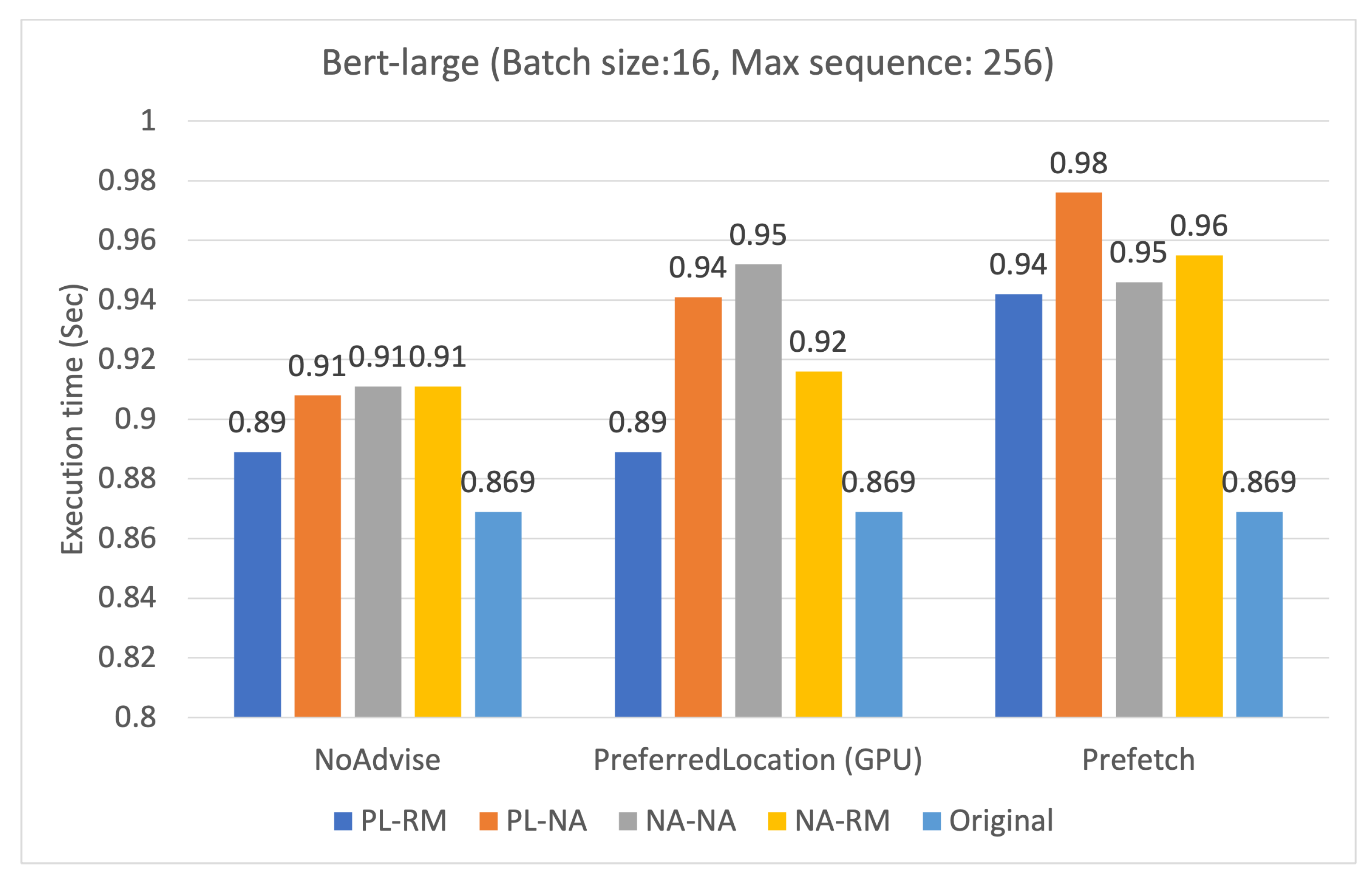

Applied Sciences | Free Full-Text | Efficient Use of GPU Memory for Large-Scale Deep Learning Model Training

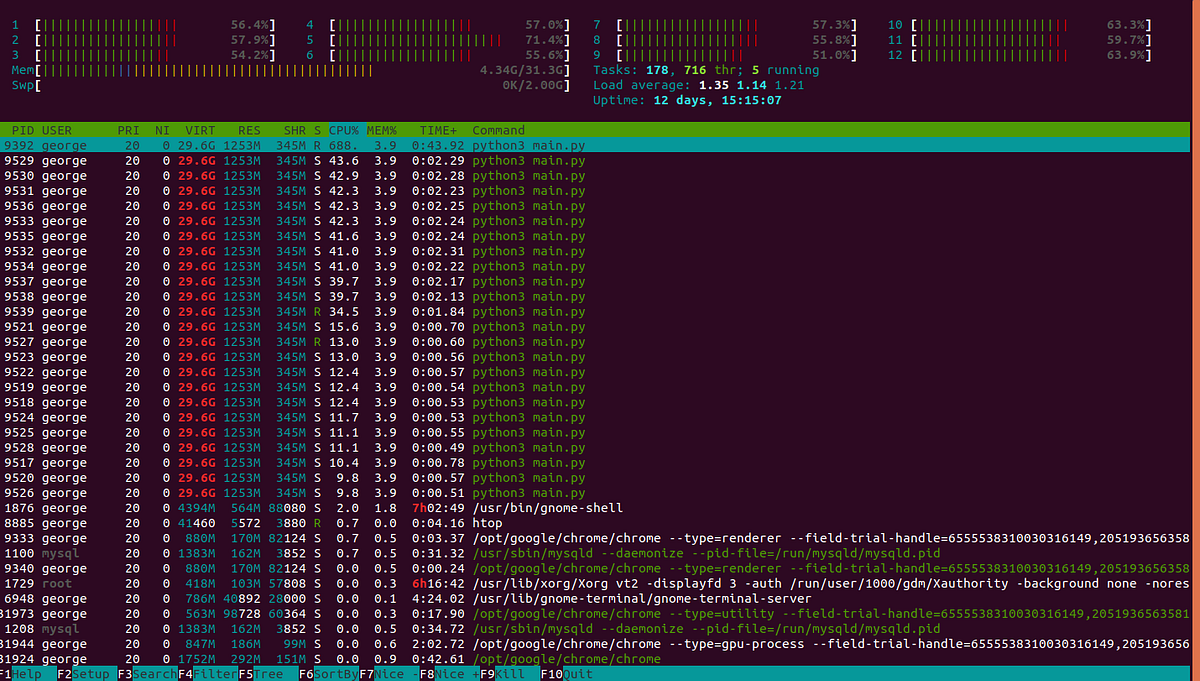

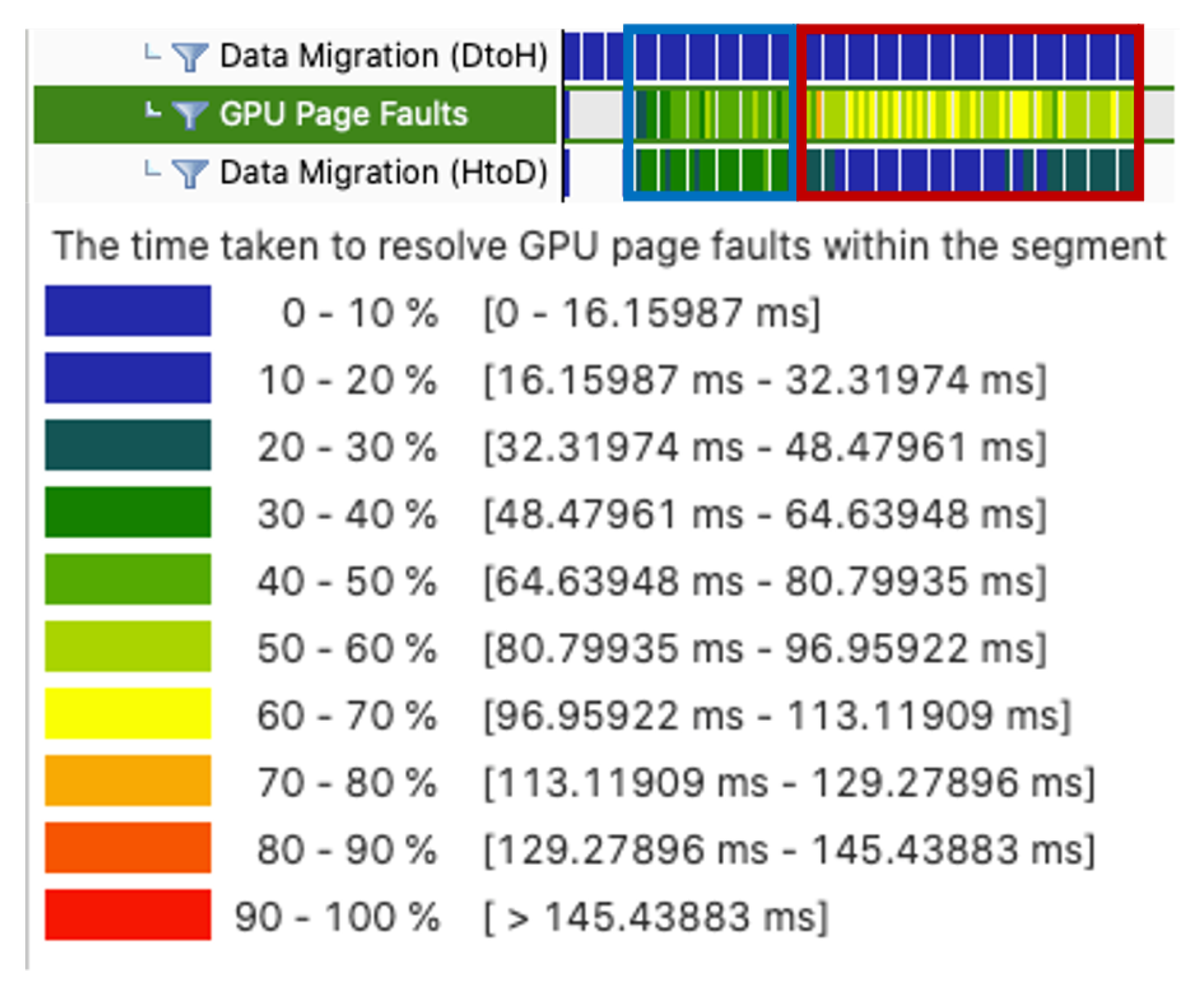

deep learning - Pytorch: How to know if GPU memory being utilised is actually needed or is there a memory leak - Stack Overflow